In 2018, I noticed a persistent problem in our antimicrobial stewardship (AMS) post-prescription review process.

I'd give feedback to an intern or resident. The recommendation would usually be actioned the same day. Then, weeks later, I'd see the exact same issue appear again.

We were stuck on a hamster wheel.

That experience ultimately led to the development of prescription support pathways, pushing good decisions upstream so post-prescription review wasn't constantly doing damage control. But before we got there, I tried a different experiment.

Together with an extremely talented developer, Toby Maddern, I linked the hospital pathology and medications systems. The setup was a brilliant but janky pathology PDF decoder stitched together with MedChart medication Excel files. It was clunky, but it worked.

The output was a clean, daily patient review report that flagged:

- drug–bug mismatches

- renal function versus dose failures

- medication–comorbidity conflicts

- and other high-risk prescribing patterns

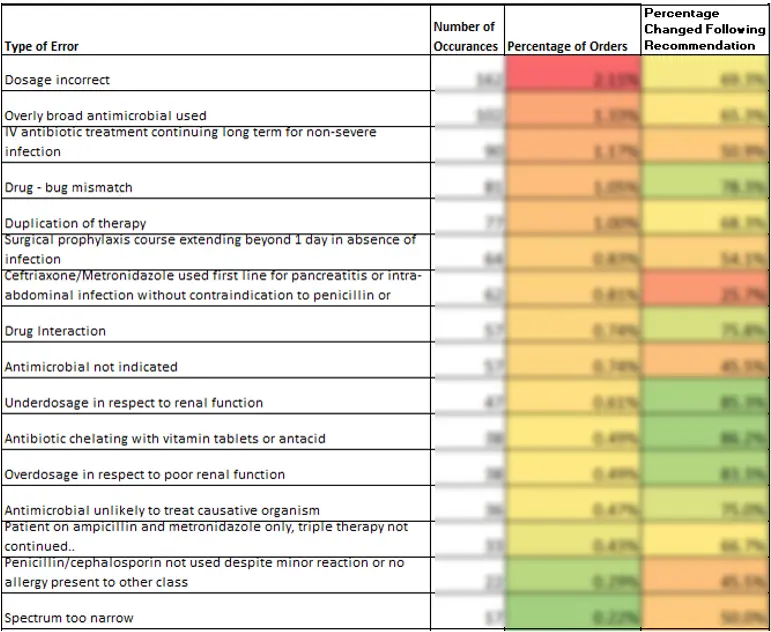

Using this tool, the antimicrobial stewardship team reviewed every antimicrobial prescription written across four hospitals over a 12-month period.

The system automatically recorded:

- the prescriber

- the responsible consultant

- our review notes on what went well and what didn't

By the end of the year, we had just over 10,000 antimicrobial reviews, with any review graded as "suboptimal" double-checked by an infectious diseases registrar or consultant before feedback went to the team. That dataset was something new.

The Individual Feedback Reports

I built an exporter that converted those audit results into individual feedback reports. Each consultant or specialist received a summary showing:

- their prescribing profile

- the proportion of scripts rated optimal versus needing improvement

- how they compared with their peer group
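To make the shape of such a summary concrete, here is a minimal sketch of the aggregation. It is not the original exporter. The record layout, the names in `reviews`, and the `summarise` function are all assumptions made for illustration, and the peer-group rate here includes the consultant's own reviews.

```python
from collections import defaultdict

# Hypothetical review records: (consultant, peer_group, rating).
# The names and ratings are invented for illustration only.
reviews = [
    ("Consultant A", "surgery", "optimal"),
    ("Consultant A", "surgery", "suboptimal"),
    ("Consultant B", "surgery", "optimal"),
    ("Consultant B", "surgery", "optimal"),
    ("Consultant C", "medicine", "suboptimal"),
    ("Consultant C", "medicine", "optimal"),
]


def summarise(reviews):
    """Return each consultant's optimal rate alongside their peer group's rate."""
    own = defaultdict(lambda: [0, 0])    # consultant -> [optimal count, total]
    group = defaultdict(lambda: [0, 0])  # peer group -> [optimal count, total]
    membership = {}                      # consultant -> peer group

    for consultant, peer_group, rating in reviews:
        is_optimal = 1 if rating == "optimal" else 0
        own[consultant][0] += is_optimal
        own[consultant][1] += 1
        group[peer_group][0] += is_optimal
        group[peer_group][1] += 1
        membership[consultant] = peer_group

    report = {}
    for consultant, (optimal, total) in own.items():
        group_optimal, group_total = group[membership[consultant]]
        report[consultant] = {
            "reviews": total,
            "optimal_rate": optimal / total,
            "peer_group": membership[consultant],
            # Peer rate includes the consultant's own reviews (a simplification).
            "peer_optimal_rate": group_optimal / group_total,
        }
    return report


report = summarise(reviews)
for name, row in report.items():
    print(name, row)
```

A real report would also need the review notes and some handling for consultants with very few reviews, where a single rating can swing the percentage.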

This is where things got uncomfortable.

When Feedback Hits Senior Staff

The project nearly died before it started. Release of the reports was debated for quite a while in the infectious diseases meeting, and without the backing of the infectious diseases director it wouldn't have gone ahead. The debate was for good reason.

Imagine being a senior specialist with 40+ years of experience and receiving an audit report containing the opinion of a relatively junior AMS pharmacist, sometimes together with an infectious diseases registrar.

Some loved it. Some hated it.

After the reports went out, my job got noticeably more stressful.

One senior physician fired back a series of emails, systematically explaining why many of the "suboptimal" ratings were wrong—and, to be fair, he was right on several counts. He escalated the issue to the internal medicine meeting and director of medical services and requested the practice stop.

At the same time, a vascular surgeon absolutely loved it. He and his colleagues shared their reports, compared results, and actively competed to be ranked least inappropriate. I was invited to present the findings at the surgical division senior meeting.

Following a combination of hate mail and praise, I got an email from an ENT surgeon asking for a meeting. I was dreading that meeting...

I walked in expecting confrontation. Instead, the entire ENT team was there for a quality improvement session. We reviewed each case together, discussed the clinical rationale behind decisions, and debated what "optimal" actually meant in each scenario. I learned a lot, and so did they. It was extremely positive.

The Unexpected Outcome

The most important outcome wasn't improved prescribing metrics. It was bidirectional learning and a shared understanding.

We learned far more about the real-world drivers behind specialist decision-making. And for the first time, specialists genuinely engaged with the AMS perspective—not as rules imposed on them, but as a conversation.

We got things wrong. There were disagreements. Personality mattered. Some specialists enjoyed benchmarking and competition. Others saw the reports as an attack on autonomy.

That's the reality.

Promoting antimicrobial stewardship is more social science than clinical science.

Refining the Approach

In subsequent years, we adjusted the model:

- added explicit disclaimers acknowledging that reviews reflected the information available at the time

- broadened peer groups to protect anonymity in small specialties (for example, rolling ENT into a broader surgical cohort)
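The peer-group broadening can be sketched as a simple fallback rule. This is an illustration under stated assumptions, not the rule we actually used: the `min_size` threshold, the `BROADER_COHORT` mapping, and the `assign_peer_groups` function are all hypothetical.

```python
from collections import Counter

# Hypothetical mapping from a small specialty to a broader cohort.
BROADER_COHORT = {"ENT": "Surgery", "Vascular": "Surgery"}


def assign_peer_groups(specialty_by_consultant, min_size=5):
    """Roll specialties with fewer than min_size consultants into a broader cohort.

    Specialties with no entry in BROADER_COHORT are left unchanged, so a real
    implementation would need to decide how to handle those.
    """
    counts = Counter(specialty_by_consultant.values())
    groups = {}
    for consultant, specialty in specialty_by_consultant.items():
        if counts[specialty] < min_size:
            groups[consultant] = BROADER_COHORT.get(specialty, specialty)
        else:
            groups[consultant] = specialty
    return groups


# Invented example: two ENT surgeons and three general physicians.
example = {
    "Dr 1": "ENT",
    "Dr 2": "ENT",
    "Dr 3": "General Medicine",
    "Dr 4": "General Medicine",
    "Dr 5": "General Medicine",
}
groups = assign_peer_groups(example, min_size=3)
print(groups)
```

With a threshold of three, the two ENT surgeons are benchmarked within the wider surgical cohort, while the general physicians keep their own group.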

The teething problems were real, but the initiative was overwhelmingly positive.

It improved prescribing. It improved relationships. And it made us a better stewardship team.

Where This Leaves Us Now

We haven't built individual feedback reporting into Clinical Branches yet. Not because we can't, but because this approach requires maturity.

The technical foundations are already there.

Today, we could do it properly:

- self-serve dashboards for teams and specialists

- transparent benchmarking

- AI-assisted report narratives that provide context rather than judgement, without any human middleman (respecting each team's privacy)

What we're waiting for is the right partner: a stewardship team confident enough to engage with discomfort and committed to meaningful behaviour change.

Looking Ahead

If you're working in stewardship, quality improvement, or behavioural research, and you're willing to ruffle a few feathers, we'd be keen to talk.

A little bit of disagreement can be a good thing—it is where we learn and where the improvement starts.

Ready to Improve Stewardship Outcomes?

If you're interested in implementing individual feedback reporting or exploring how data-driven quality improvement can transform your stewardship program, we'd love to hear from you.
